Isabel Neto & Yuhan Hu - Fostering Inclusion Using Social Robots

Fostering Inclusion using Social Robots in mixed-visual ability classrooms using multimodal feedback.

Visually impaired children are increasingly included in mainstream schools as an inclusive education practice. However, even though they sit side by side with their sighted peers, they face classroom participation issues, a lack of collaborative learning, reduced social engagement, and a high risk of isolation. Robots have the potential to act as intelligent and accessible social agents that actively promote inclusive classroom experiences by leveraging their physicality, bespoke social behaviors, sensors, and multimodal feedback. However, the design of social robots for mixed-visual ability classrooms remains mostly unexplored. In the presentation, we will share our research on exploring robot multimodal feedback such as movement, speech, and textures to foster inclusion in group activities among children with mixed visual abilities.

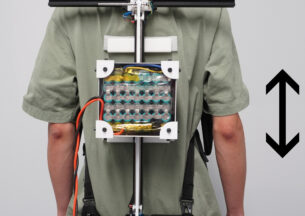

Internal and emotional state expression is at the core of Human-Robot Interaction (HRI), and many social robots are designed to convey their states not only with speech but also through nonverbal signals like facial expressions and body movements. To enable emotion expression for robots in a manner applicable to different robot configurations, we developed an expressive channel for social robots in the form of texture changes on a soft skin. Our approach is inspired by biological systems that alter skin textures to express emotional states, such as human goosebumps and the raised fur on a cat’s back. Adding such expressive textures to social robots can enrich the design space of a robot’s expressive spectrum: the robot can interact both visually and haptically, and even communicate silently, for example in low-visibility scenarios. The soft robotic skin generates pneumatically actuated dynamic textures, deforming in response to changes in pressure inside fluidic chambers. In the presentation, we will share the mechanical design of such robotic textures as well as user studies on mapping texture changes to different emotional states.

Host: Sarah Sebo

This event will be both in-person and remote. For Zoom information, sign up for the HCI Club mailing list.

Speakers

Isabel Neto

I am a Ph.D. student in Computer Science and Engineering at the University of Lisbon and a researcher at GAIPS (Research Group on AI for People and the Society) at INESC-ID and at the Interactive Technologies Institute / LARSyS, with a focus on social agents for inclusion in schools.

My research focuses on novel experiences among mixed-visual ability children, exploring group engagement within Human-Robot Interaction, improving accessibility, and valuing individual abilities to create inclusive experiences.

With 20+ years of experience in senior leadership, design, business, people, and change in the IT and telecommunications industry, I have worked across several technical and management areas in Digital, Customer Operations, and IT. I have collaborated with many talented people and have long experience with a customer-centric approach, leading design thinking methods and agile teams to build and develop personalized and ethical experiences in digital and assisted channels.

I aim to use artificial intelligence, design thinking, and service design to shape great experiences, products & services.

Yuhan Hu

Yuhan Hu is a third-year Ph.D. student in the Human-Robot Collaboration & Companionship (HRC2) Lab at Cornell, working on ShadowSense and human-robot collaborative swarms. She previously worked on texture-changing social robots.